Robotic Database - Robotic platform | TERRINet

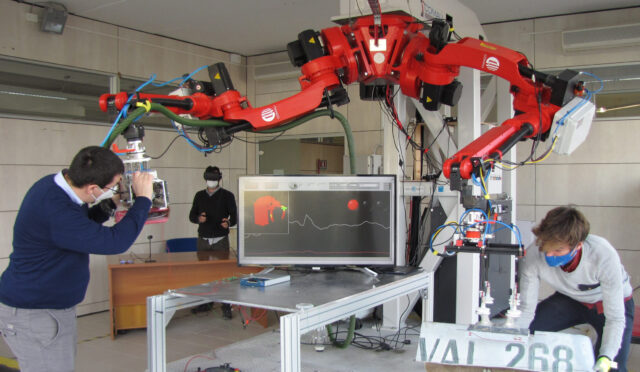

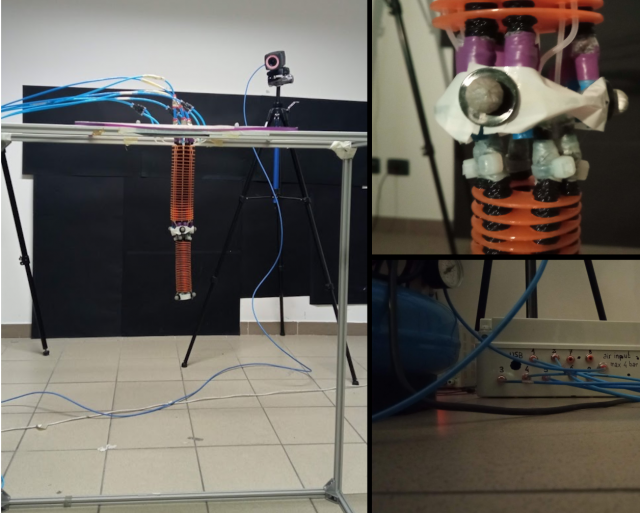

MBZIRC Hexarotor

Self-designed hexarotor controlled by a Pixhawk autopilot running PX4. An Intel NUC (Core i5) is also on board to provide additional computing power. The platform runs ROS and can be simulated in Gazebo. The payload includes, among other items, laser sensors, GPS, a stereo camera and an electromagnet. Self-designed robotic arms can also be mounted on the platform, making it a multitask aerial robot.

Key features:

- MTOW (maximum take-off weight): 10 kg

- Embedded sensors

- 2 radio links for communications (Ubiquiti)

- Endurance: 12 minutes

- Weight: 6 kg, 7.5 kg with arms

- Max speed: 5 m/s horizontal, 2 m/s vertical
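The listed masses indicate how much payload (sensors, electromagnet, grabbed objects) the hexarotor can carry: 10 − 6 = 4 kg without arms and 10 − 7.5 = 2.5 kg with arms. A minimal sketch of that calculation, assuming the 6 kg and 7.5 kg figures already include the battery and that MTOW is the only limit (in practice endurance also drops as mass increases, which this ignores):

```python
# Payload margin = MTOW - aircraft weight, using the key-feature figures.
# Assumption: the listed weights include the battery.
MTOW_KG = 10.0             # maximum take-off weight
WEIGHT_KG = 6.0            # airframe without arms
WEIGHT_WITH_ARMS_KG = 7.5  # airframe with the robotic arms fitted


def payload_margin(mtow_kg: float, empty_weight_kg: float) -> float:
    """Return the mass still available for payload before reaching MTOW."""
    if empty_weight_kg > mtow_kg:
        raise ValueError("empty weight exceeds MTOW")
    return mtow_kg - empty_weight_kg


print(f"Without arms: {payload_margin(MTOW_KG, WEIGHT_KG):.1f} kg")
print(f"With arms:    {payload_margin(MTOW_KG, WEIGHT_WITH_ARMS_KG):.1f} kg")
```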

Possible applications:

- Multipurpose aerial cooperation for structure assembly

- Object grabbing in inaccessible locations

- Use of tools for aerial repairs

- Obstacle detection and removal

- Load transportation

Technical specifications

| Parameter | Value |
| --- | --- |
| Degrees of freedom (robotic arm) | 6 |
| Average speed | 2 m/s horizontal, 1 m/s vertical |
| Altitude | 20 m (software limit) |
| Power supply | 6S LiPo |
| Interface | Ubuntu/ROS |
| Weight | 6 kg |
| Autopilot | Pixhawk (PX4) |
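The 20 m software altitude limit, the average speeds and the 12-minute endurance together bound what a single flight can do. The sketch below is a hypothetical ground-side pre-flight check that combines them; the `Waypoint` type and `check_mission` function are illustrative names, and it does not reflect how the altitude limit is actually enforced on board. It assumes horizontal and vertical moves happen one after the other (a conservative estimate) and keeps no battery reserve.

```python
import math
from dataclasses import dataclass

# Figures taken from the key features and specification table.
ALTITUDE_LIMIT_M = 20.0  # software altitude limit
AVG_SPEED_H_MS = 2.0     # average horizontal speed, m/s
AVG_SPEED_V_MS = 1.0     # average vertical speed, m/s
ENDURANCE_S = 12 * 60    # 12-minute endurance, in seconds


@dataclass
class Waypoint:
    """Hypothetical local-frame waypoint in metres (z = height above take-off)."""
    x: float
    y: float
    z: float


def check_mission(waypoints: list[Waypoint]) -> float:
    """Return a conservative flight-time estimate in seconds.

    Raises ValueError if a waypoint is above the altitude limit or the
    estimate exceeds the endurance. Horizontal and vertical moves are
    treated as sequential, which overestimates the time of combined moves.
    """
    for wp in waypoints:
        if wp.z > ALTITUDE_LIMIT_M:
            raise ValueError(f"waypoint at {wp.z} m exceeds the {ALTITUDE_LIMIT_M} m limit")
    total_s = 0.0
    for a, b in zip(waypoints, waypoints[1:]):
        horizontal_m = math.hypot(b.x - a.x, b.y - a.y)
        vertical_m = abs(b.z - a.z)
        total_s += horizontal_m / AVG_SPEED_H_MS + vertical_m / AVG_SPEED_V_MS
    if total_s > ENDURANCE_S:
        raise ValueError(f"estimated {total_s:.0f} s exceeds the {ENDURANCE_S} s endurance")
    return total_s


# Take off to 10 m, fly 50 m horizontally, then land.
mission = [Waypoint(0, 0, 0), Waypoint(0, 0, 10), Waypoint(30, 40, 10), Waypoint(30, 40, 0)]
print(f"Estimated flight time: {check_mission(mission):.0f} s")
```

For the example mission this gives 10 s + 25 s + 10 s = 45 s, well inside the 720 s endurance. A real planner would also subtract a landing reserve and account for the heavier configuration with arms.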

Access information

| Field | Details |
| --- | --- |
| Corresponding infrastructure | Universidad de Sevilla Robotics, Vision and Control Group |
| Location | Camino de los Descubrimientos, |
| Unit of access | Working day |

Access history

MSFOC-ESACMS - Multi-Source Sensor Fusion for Object Classification and Enhanced Situational Awareness within Cooperative Multi-Robot Systems

Qingqing Li

In urban and other dynamic environments, autonomous cars must be able to understand what is happening around them and predict potentially dangerous situations. This includes predicting pedestrian movement (e.g., a pedestrian might cross the road unexpectedly) and taking extra safety measures where construction or repair work is under way. This research focuses on enhancing an autonomous vehicle’s understanding of its environment through cooperation with other agents operating in the same environment. By fusing information from sensors mounted on different devices and applying distributed machine learning algorithms, it aims to improve the safety and stability of autonomous cars.

The proposed research is multidisciplinary. It will leverage recent advances in three-dimensional convolutional neural networks, sensor fusion, new communication architectures and the fog/edge computing paradigm. The project will affect how autonomous vehicles, and self-driving cars in particular, understand and interact with each other and with their environment.
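The project description does not say which fusion method is used. To illustrate the basic idea of combining estimates from sensors on different agents, the sketch below uses a standard textbook technique, inverse-variance weighting of independent Gaussian estimates; it is not the MSFOC-ESACMS method, which the text says builds on 3D convolutional neural networks and distributed learning. The `Estimate` type and `fuse` function are hypothetical names, and the example is one-dimensional for brevity.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A 1-D position estimate in metres with its variance in m^2."""
    mean: float
    variance: float


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted fusion of independent Gaussian estimates.

    Each estimate is weighted by 1/variance, so more certain sensors count
    more, and the fused variance is smaller than any single input variance.
    Assumes the estimates' errors are independent.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    mean = sum(w * e.mean for w, e in zip(weights, estimates)) / total
    return Estimate(mean=mean, variance=1.0 / total)


# A car's sensor places a pedestrian at 10 m (variance 4 m^2); a second,
# more precise agent places them at 12 m (variance 1 m^2).
fused = fuse([Estimate(10.0, 4.0), Estimate(12.0, 1.0)])
print(f"Fused position: {fused.mean:.1f} m, variance {fused.variance:.1f} m^2")
```

Here the fused position is 11.6 m with variance 0.8 m², pulled towards the more precise agent. When agents share information and their errors are correlated, the independence assumption breaks and techniques such as covariance intersection are the safer choice.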