
Motion Capture Facilities

A large experimental room equipped with an optoelectronic motion capture system to compute the position of reflective markers, force plates embedded in the floor to measure ground reaction forces, 6-axis force sensors to measure additional contact forces, and wireless EMG to measure muscle activity. The system comes with processing software to reconstruct whole-body dynamics and identify key elements of musculoskeletal activity.

Key features:

- 16 wireless EMG sensors

- Lode Excalibur ergocycle with six cells sensors at the handlebars, the seat and the pedals

- 2 additional 6-axis force sensors to record other contact forces (e.g., at the hand)

- Two force plates embedded in the floor to measure ground reaction forces

- 14 high-resolution infrared cameras providing accurate positioning of reflective markers

- Biodex isokinetic ergometer to quantify mechanical joint power, torque, and/or velocity

Possible applications:

- Transfer of human movement to humanoid robots

- Motion Ergonomics

- Human Motion analysis and Biomechanics

- Robot localization and state reconstruction

- Virtual reality

Technical specifications

| Component | Specification |
| --- | --- |
| Wireless EMG | Mini Wave from Cometa with 16 sensors equipped with 3D accelerometers |
| Force sensors | 2 SENSIX K27x63F25270 6-axis force sensors, frequency 800 Hz on each axis, simultaneous measurement range: force axes 1010 N, torque axes 175 N·m |
| Embedded force platforms | 2 AMTI force platforms (180*90 and 45*45), useful for gait and postural analysis |
| MOCAP | Several optoelectronic systems (Optitrack, Vicon, Motion Analysis), including a network of high-resolution infrared cameras and advanced data processing software |
| Software | Nexus 2.7 for 3D reconstruction using Vicon |
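For the postural analysis mentioned for the force platforms, a quantity often derived from a platform's force and moment readings is the center of pressure (COP). The following is a minimal sketch, not the facility's processing software: it assumes a right-handed sensor frame with its origin on the platform, moments expressed about that origin, and a hypothetical `surface_z` parameter giving the height of the plate surface in that frame. The function name, parameters, and the 10 N threshold are illustrative assumptions; the actual axis conventions should be taken from the platform documentation.

```python
from typing import Optional, Sequence, Tuple


def center_of_pressure(
    force: Sequence[float],
    moment: Sequence[float],
    surface_z: float = 0.0,
    min_fz: float = 10.0,
) -> Optional[Tuple[float, float]]:
    """Return the COP (x, y) in the sensor frame, or None if unloaded.

    Assumptions (not from the source): right-handed frame, moments taken
    about the frame origin, plate surface at height surface_z (metres),
    forces in newtons and moments in newton-metres.
    """
    fx, fy, fz = force
    mx, my, _mz = moment

    # With almost no vertical load the division below is ill-conditioned,
    # so report "no contact" instead of a meaningless position.
    if abs(fz) < min_fz:
        return None

    # From M = r x F with r = (x, y, surface_z):
    #   Mx = y*Fz - surface_z*Fy   and   My = surface_z*Fx - x*Fz
    x = (surface_z * fx - my) / fz
    y = (mx + surface_z * fy) / fz
    return (x, y)


if __name__ == "__main__":
    # A purely vertical 800 N load applied at (0.10 m, 0.20 m).
    print(center_of_pressure((0.0, 0.0, 800.0), (160.0, -80.0, 0.0)))
```

Returning `None` below a load threshold keeps swing-phase samples in gait trials from producing spurious COP values.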

Access information

| Field | Value |
| --- | --- |
| Corresponding infrastructure | Centre national de la recherche scientifique, The Department of Robotics of LAAS |
| Location | 7 Avenue du Colonel Roche, |
| Unit of access | Working day |

Access history

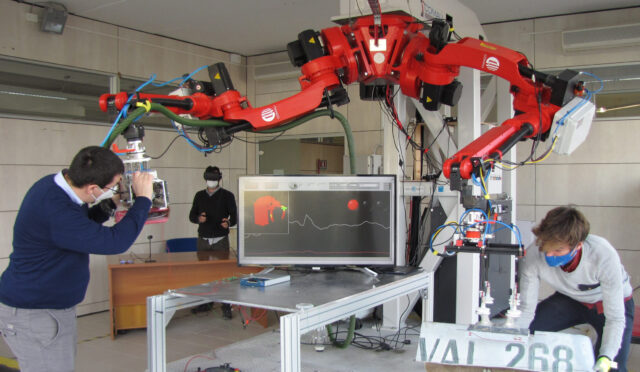

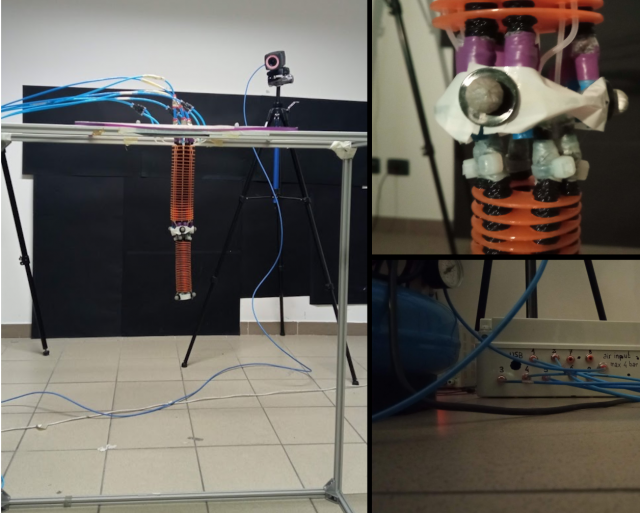

SMILE - Structure-level Multi-sensor Indoor Localization Experiment

Piotr Skrzypczynski, Jan Wietrzykowski

The SMILE project aimed to produce a new dataset that facilitates the development and evaluation of machine-learning-based indoor localization methods. The core idea is that accurate multi-sensor data describing highly structured indoor scenes would allow us to develop new learning-based methods for describing scenes with structured geometric features, such as planar segments and edges. The scene description should support the task of agent localization. Obtaining such a description is challenging for the passive cameras often used in personal indoor localization or augmented reality setups. To address these difficulties, we automatically supervise the learning of the scene representation with ground truth trajectories from a motion capture system and depth data obtained from a 3-D laser scanner. Moreover, we embed in the dataset additional localization cues coming from an Inertial Measurement Unit (IMU). The facility available at LAAS-CNRS within the TERRINet TNA program made it possible to collect the necessary data.
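Ground truth trajectories from a motion capture system can also serve to score a localization method. The sketch below is one common way to do this, not the SMILE evaluation protocol, which the text does not describe: each estimated position is matched to the ground truth sample nearest in time, and the root-mean-square translation error is reported. It assumes both trajectories are already expressed in the same reference frame and on the same clock; the function names, the `(t, x, y, z)` tuple layout, and the `max_dt` tolerance are illustrative assumptions.

```python
import bisect
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (timestamp [s], x, y, z [m])


def nearest_index(times: Sequence[float], t: float) -> int:
    """Index of the entry in sorted `times` that is closest to `t`."""
    i = bisect.bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    return i if times[i] - t < t - times[i - 1] else i - 1


def translation_rmse(
    ground_truth: Sequence[Sample],
    estimate: Sequence[Sample],
    max_dt: float = 0.01,
) -> Tuple[float, int]:
    """RMS position error over time-matched samples, plus the match count.

    Estimated samples with no ground truth within `max_dt` seconds are
    skipped. No spatial alignment is performed (same-frame assumption).
    """
    if not ground_truth:
        raise ValueError("ground truth trajectory is empty")
    gt: List[Sample] = sorted(ground_truth)
    times = [s[0] for s in gt]

    squared_errors = []
    for t, x, y, z in estimate:
        j = nearest_index(times, t)
        if abs(times[j] - t) > max_dt:
            continue  # no ground truth close enough in time
        _, gx, gy, gz = gt[j]
        squared_errors.append((x - gx) ** 2 + (y - gy) ** 2 + (z - gz) ** 2)

    if not squared_errors:
        raise ValueError("no samples could be matched in time")
    return math.sqrt(sum(squared_errors) / len(squared_errors)), len(squared_errors)


if __name__ == "__main__":
    mocap = [(0.0, 0.0, 0.0, 0.0), (0.1, 1.0, 0.0, 0.0), (0.2, 2.0, 0.0, 0.0)]
    estimated = [(0.001, 0.0, 0.0, 0.0), (0.101, 1.0, 0.3, 0.4), (0.5, 9.0, 9.0, 9.0)]
    print(translation_rmse(mocap, estimated))
```

In practice the two trajectories usually differ by a rigid transform and a clock offset, so an alignment step would precede this computation; it is omitted here to keep the sketch short.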

The result of the SMILE experiment is a large dataset of indoor data that should be useful in our research on data-driven methods for structure-level scene description. Because the main outcome of the experiment is a dataset rather than direct scientific results, we can reuse the collected data many times for different localization-related research. Moreover, within the TERRINet TNA program, we gained experience in collecting large datasets, including data analysis and the generation of optimal experiment scenarios. Our long-term goal is to improve the PlaneLoc system (Wietrzykowski & Skrzypczynski, 2019) by enabling operation with passive vision systems, which will make it much more practical for personal localization, large-scale augmented reality applications, and service robots. A proper dataset for learning and evaluation plays an essential role in achieving this goal, and participation in TERRINet brought these plans closer to reality.