Robotic Database - Single Infrastructure | TERRINet

Address: Route Cantonale,

1015 Lausanne, Switzerland

Website

https://lis.epfl.ch/

Scientific Responsible

Auke Ijspeert

About

The Laboratory of Intelligent Systems takes inspiration from nature to design artificial intelligence and robots that are soft, fly, or evolve their own behaviors. The main research areas are the following:

Aerial Robotics

We design flying robots, or drones, with rich sensory and behavioural abilities that can change morphology to smoothly and safely operate in different environments. These drones are conceived to work cooperatively and with humans to power civil applications in transportation, aerial mapping, agriculture, search-and-rescue, and augmented virtual reality.

Evolutionary Robotics

Evolutionary robotics takes inspiration from natural evolution to automatically design robot bodies and brains (neural networks) and to understand the evolution of living systems. Topics of interest include open-ended evolution, evolution of social cooperation and competition, evolution of communication, evolution of multi-cellular robots, and evolvable hardware.

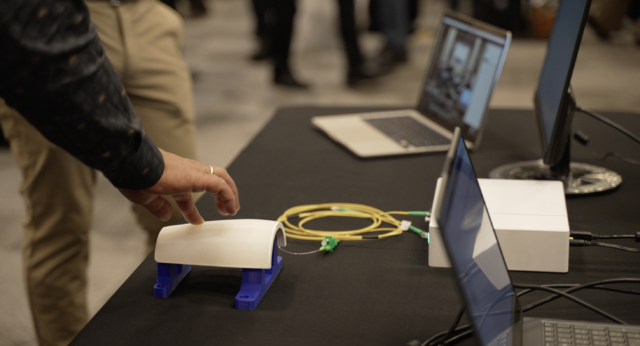

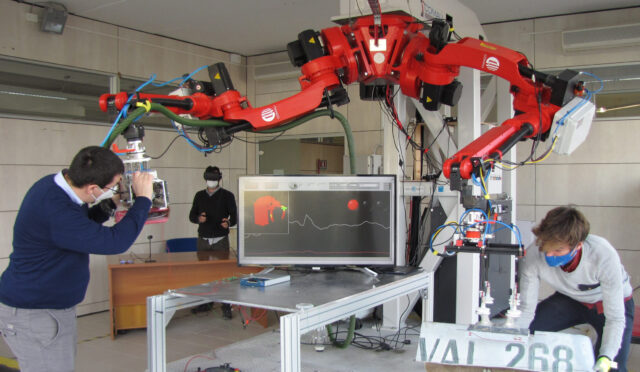

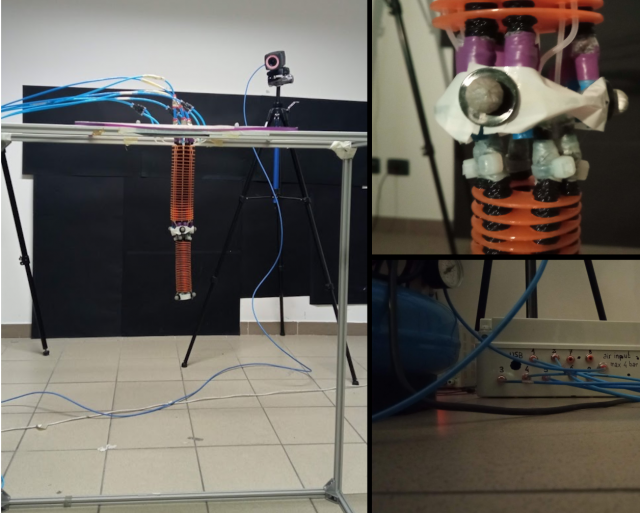

Soft Robotics

Soft robots can continuously change their shape, withstand strong mechanical forces, and passively adapt to their environment. The “softness” makes these robots safer and potentially more robust and versatile than their counterparts made of bolts and metal. Examples include soft grippers that manipulate complex shapes without complex software and insect-inspired compound eyes that conform to curved surfaces to provide large fields of view. Soft robotics technologies will find applications in mobile robotics, in wearable robotics, and in many other areas such as manufacturing, rehabilitation, and portable intelligent devices.

Wearable Robotics

We investigate and develop novel soft wearable robots, or exosuits, for natural interaction between humans and robots and for novel forms of augmented reality.

Traditional human-robot interaction often requires funnelling rich sensory-motor information through simplified computer interfaces, such as visual displays and joysticks, that demand cognitive effort. Instead, we need novel embodied interactions where humans and machines feel each other throughout the extension of their bodies and their rich sensory channels. We want to enable human experience of non-anthropomorphic morphologies and behaviors, such as flying, and novel forms of augmented reality through wearable robotics technologies.

Presentation of platforms

Available platforms

AR drone

Parrot AR.Drone 2.0 Elite Edition allows you to see the world from above and to share your photos and videos on social networks instantly. It manoeuvres intuitively with a smartphone or tablet and offers exceptional sensations right from take-off. The soft protective frame allows it to be used indoors and in crowded environments.

“Birdly” flight simulator with haptic feedback

Visually immersed through a head-mounted display, you are embedded in a high-resolution virtual landscape. You command your flight with your arms and hands, which directly correlate to the wings (flapping) and the primary feathers of the bird (navigation). This input is reflected in the flight model of the bird and returned as physical feedback by the simulator through pitch (nick), roll and heave movements. To evoke an intense and immersive flying adventure, SOMNIACS relies on precise sensory-motor coupling and strong visual impact. Additionally, Birdly® includes sonic and wind feedback: the simulator regulates the headwind from a fan mounted in front of you according to the flight speed.

eBee drone

senseFly’s eBee is a fully autonomous and easy-to-use mapping drone. Use it to capture high-resolution aerial photos that you can transform into accurate orthomosaics (maps) and 3D models. The eBee package contains all you need to start mapping: RGB camera, batteries, radio modem and eMotion software.

Motion capture arena

This facility is a large room (~10 x 10 x 5.5 m volume) equipped with an array of OptiTrack motion capture cameras and accompanying software. The system can track up to 50 rigid objects with a position error of less than 1 mm at a frame rate of up to 200 fps. The cameras illuminate the scene with IR light, so the tracked objects must carry special passive markers. Most of the wall surface is covered with a protective net. Although initially designed for experiments with flying robots, the arena can be used in many other robotic applications. An AR drone equipped with markers and an open source flight controller is available on site for experiments.
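As a rough illustration of what the arena figures mean in practice, the sketch below turns the quoted frame rate and position error into a sample period and a bound on the error of a velocity estimated by differencing consecutive positions. It assumes the 1 mm figure can be treated as a worst-case bound on each sample, which the description does not state; the function names are hypothetical and are not part of any motion capture software.

```python
def sample_period_s(frame_rate_hz):
    """Time between two consecutive motion capture frames, in seconds."""
    return 1.0 / frame_rate_hz


def worst_case_velocity_error(position_error_m, frame_rate_hz):
    """Bound on the error of a two-sample finite-difference velocity.

    Assumption: each position sample is off by at most position_error_m,
    and two consecutive samples may err in opposite directions.
    """
    return 2.0 * position_error_m * frame_rate_hz


def finite_difference_velocity(positions_m, frame_rate_hz):
    """Velocity estimates (m/s) along one axis from consecutive positions."""
    dt = sample_period_s(frame_rate_hz)
    return [(b - a) / dt for a, b in zip(positions_m, positions_m[1:])]


if __name__ == "__main__":
    # Figures quoted for the arena: up to 200 fps, error below 1 mm.
    print(sample_period_s(200.0))
    print(worst_case_velocity_error(0.001, 200.0))
```

Under these assumptions, a 5 ms sample period and a 1 mm bound give a raw velocity error of up to about 0.4 m/s, so velocity estimates would normally be filtered over several frames.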

RoboGen

RoboGen™ is an open source platform for the co-evolution of robot bodies and brains. It has been designed with a primary focus on evolving robots that can be easily manufactured via 3D printing and the use of a small set of low-cost, off-the-shelf electronic components. It features an evolution engine and a physics simulation engine. Additionally, it includes utilities for generating design files of body components to be used with a 3D printer, and for compiling neural-network controllers to run on an Arduino microcontroller board.
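To give a feel for what an evolution engine does, here is a minimal sketch of a (1+λ) evolutionary loop that tunes the weights of a tiny neural-network controller. It is not RoboGen code and does not use RoboGen's API: the controller layout, the toy fitness cases, and all names and parameters are assumptions made for illustration, and a real setup would score each candidate in a physics simulation instead.

```python
import math
import random

# Hypothetical toy task: map two sensor readings to two motor commands.
CASES = [
    ([0.0, 1.0], [0.8, -0.8]),
    ([1.0, 0.0], [-0.8, 0.8]),
]


def controller(weights, inputs):
    """Single-layer network: one tanh neuron per output, bias stored last."""
    stride = len(inputs) + 1
    outputs = []
    for o in range(len(weights) // stride):
        row = weights[o * stride:(o + 1) * stride]
        activation = row[-1] + sum(w * x for w, x in zip(row, inputs))
        outputs.append(math.tanh(activation))
    return outputs


def fitness(weights):
    """Negative squared error over the toy cases (higher is better)."""
    error = 0.0
    for inputs, targets in CASES:
        outputs = controller(weights, inputs)
        error += sum((o - t) ** 2 for o, t in zip(outputs, targets))
    return -error


def evolve(generations=200, offspring=8, sigma=0.2, seed=0):
    """(1+lambda) strategy: mutate the parent, keep the best if not worse."""
    rng = random.Random(seed)
    parent = [0.0] * 6  # 2 outputs x (2 inputs + 1 bias)
    parent_fit = fitness(parent)
    for _ in range(generations):
        children = [[w + rng.gauss(0.0, sigma) for w in parent]
                    for _ in range(offspring)]
        best = max(children, key=fitness)
        best_fit = fitness(best)
        if best_fit >= parent_fit:
            parent, parent_fit = best, best_fit
    return parent, parent_fit


if __name__ == "__main__":
    weights, score = evolve()
    print(score)
```

The same loop structure applies when the genome also encodes the body: only the representation, the mutation operator, and the fitness evaluation change.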

LeQuad quadcopter

The leQuad quadcopter was designed in the Laboratory of Intelligent Systems, EPFL, which makes it easily reconfigurable and repairable. Its very lightweight and rigid frame gives it a very good thrust-to-weight ratio, which allows many additional components to be added to the basic configuration. It is mostly used indoors with our MoCap system, but may be fitted with a GPS sensor for outdoor experiments.